Holmes.Appendices

Reading: Holmes, T. & Casotto, M., “Penalized Regression and Lasso Credibility,” CAS Monograph #13, November 2024. Chapter 8 and Appendices.

Synopsis: This is a quick review of Holmes and Casotto's concluding chapter plus the four appendices in the source material. Most of the content has already been covered in the earlier wiki articles. The main focus is on demonstrating that penalized regression and lasso credibility may be viewed as valid credibility procedures.

Study Tips

- Read this briefly and then move on to more important material.

Estimated study time: 1 Hour (not including subsequent review time)

BattleTable

Based on past exams, the main things you need to know (in rough order of importance) are:

- How to frame penalized regression and lasso credibility in the context of ASOP 25.

- How to rebase the model output.

Questions from the Fall 2019 exam are held out for practice purposes. (They are included in the CAS practice exam.)

| reference | part (a) | part (b) | part (c) | part (d) |
| Currently no prior exam questions |

| Full BattleQuiz | Excel Files | Forum |


In Plain English!

In Chapter 8, Holmes and Casotto offer a brief summary of why lasso credibility is powerful, but there's nothing there that we haven't already covered.

The source includes four appendices and the current CAS syllabus offers no guidance as to whether the material contained in them is directly testable. We proceed through each of them now for completeness.

Appendix A: Bayesian Interpretation of Credibility

We've already pulled out the relevant material from this appendix and included it within the prior wiki articles. The material covered in this section is both mathematically dense and specific to a particular set of circumstances.

All you probably need to know is that we can justify the claim that GLMs assign 100% credibility to the data, and that we can think of credibility from a Bayesian perspective in which we incorporate prior knowledge about the model. Penalized regression is a nice way of introducing Bayesian thinking to standard actuarial techniques, which allows actuaries to bridge the gap between traditional statistical methods and machine learning techniques.

Appendix B: Alignment of Lasso Credibility with ASOP 25

This is the most important of the appendices and, in our opinion, quite likely to be tested. Holmes and Casotto begin by justifying that lasso credibility is a form of credibility procedure. As you've seen in ASOP25.Credibility, a credibility procedure is a process which involves either the evaluation of subject experience for potential use in setting assumptions without reference to other data, or the identification of relevant experience and the selection and implementation of a method for blending the relevant experience with the subject experience. It is the latter situation which penalized regression and lasso credibility fall under.

Penalized regression blends relevant and subject experience together in a way which minimizes the sum of the negative log-likelihood and the penalty term. The subject experience is the observed/experienced outcome for a category within a rating variable and the relevant experience is the overall experience across all categories within the rating variable. Penalized regression measures credible deviations of the categories within the rating variable from the overall performance of that variable. So penalized regression is a credibility procedure. By extension, lasso credibility is also a credibility procedure, where the offset/complement is now an actuarially sound set of data which is predictive of the parameter under study. The key here is that the complement must be actuarially sound and have predictive power for the credibility procedure to be valid.
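The objective described above can be sketched in a few lines. This is a minimal illustration assuming a Poisson model with a log link; the function name `penalized_nll`, its arguments, and the toy data are hypothetical and are not taken from the source.

```python
import math

def penalized_nll(intercept, coefs, rows, y, offset, lam):
    """Poisson negative log-likelihood (constant term dropped) plus an L1 penalty.

    offset holds the complement on the log scale: all zeros for plain
    penalized regression, log(complement relativity) for lasso credibility.
    The intercept is left unpenalized (an assumption of this sketch).
    """
    nll = 0.0
    for x_i, y_i, off_i in zip(rows, y, offset):
        eta = off_i + intercept + sum(b * x for b, x in zip(coefs, x_i))
        nll += math.exp(eta) - y_i * eta
    penalty = lam * sum(abs(b) for b in coefs)
    return nll + penalty

# Toy data (hypothetical): one indicator column for a single non-base category.
rows = [[0.0], [1.0], [1.0]]
y = [1, 2, 3]
offset = [0.0, 0.0, 0.0]

fit_only = penalized_nll(0.1, [0.5], rows, y, offset, lam=0.0)
with_penalty = penalized_nll(0.1, [0.5], rows, y, offset, lam=2.0)
print(with_penalty - fit_only)  # the penalty charged for deviating from the complement
```

A larger `lam` makes deviations from the complement more expensive, which is how the penalty gives less credibility to the subject experience.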

ASOP 25 emphasizes that the actuary should use care in selecting the relevant experience, i.e., when choosing a valid complement/offset. In the absence of other information, a complement coefficient of 0 (relativity of 1.000 when a log-link is used) is appropriate.
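Under a log link, the relativity is the exponential of the complement coefficient plus any fitted deviation, so a deviation that shrinks to zero falls back to the complement. A minimal sketch; `final_relativity` and the 1.10 complement relativity are hypothetical illustrations.

```python
import math

def final_relativity(complement_coef, fitted_coef):
    """Relativity under a log link: exp(complement + fitted deviation)."""
    return math.exp(complement_coef + fitted_coef)

# Penalized regression: complement coefficient of 0, no credible deviation.
print(round(final_relativity(0.0, 0.0), 3))             # 1.0
# Lasso credibility: hypothetical complement relativity of 1.10, no credible deviation.
print(round(final_relativity(math.log(1.10), 0.0), 3))  # 1.1
```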

Further, the relevant experience (complement) should have characteristics similar to the subject experience. With no prior knowledge and a sufficiently homogeneous data set, a complement coefficient of zero adequately meets this. For lasso credibility, we must be more careful and ensure we choose offsets derived from sources which have similar demographics, coverages, frequency, severity, or other risk characteristics. For example, using the countrywide model output as the input for the individual state model is okay, but using, say, the relativities from a non-standard auto insurer as the offset for a preferred auto book of business would be highly questionable.

Another point ASOP 25 requires us to consider is whether penalized regression and lasso credibility procedures appropriately consider the characteristics of both the subject experience and relevant experience. The penalty parameter and its associated tuning determine how much credibility (weight) is given to each of these, and each model is fitted using a form of maximum likelihood estimation. So this point is met as well for both penalized regression and lasso credibility.

Lastly, the actuary should consider the extent to which subject experience is included in relevant experience. This requires a judgment call by the actuary. When Holmes and Casotto modeled the large state using countrywide experience as the complement, the large state was constructed to have 500,000 observations out of 3,500,000 total observations (14.3%). This is a sufficiently small percentage; had it been something like 50% then the countrywide experience may well have looked highly similar to the large state experience, causing the large state model to essentially look for deviations from itself. It's vital that the modeler/actuary actively consider this requirement at the start of each modeling project.
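The overlap check is simple arithmetic. The helper name `overlap_share` is ours; the counts are those quoted above.

```python
def overlap_share(subject_obs, relevant_obs):
    """Fraction of the relevant experience that is made up of the subject experience."""
    return subject_obs / relevant_obs

share = overlap_share(500_000, 3_500_000)
print(f"{share:.1%}")  # 14.3%
```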

Alice: "If we choose the offsets to be zero (penalized regression) then our subject experience is truly independent of the relevant experience."

One final consideration is whether the procedure is practical to implement while taking into consideration both the cost and benefit of employing a procedure. Holmes and Casotto acknowledge both penalized regression and lasso credibility come with additional computational costs, particularly due to the need to perform extensive cross-validation to tune the lambda parameter. The falling cost of cloud computing, the benefit of improved rating accuracy, and the difficulty of building a reasonable GLM on small data sets all combine to make penalized regression and lasso credibility practical to implement.
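To get a rough feel for the extra computation, a grid search over lambda with k-fold cross-validation needs roughly one fit per lambda value per fold. The grid size and fold count below are illustrative assumptions, not figures from the source, and real software may reduce the cost (for example by reusing solutions between neighboring lambda values).

```python
def cv_fit_count(n_lambdas, n_folds):
    """Approximate number of model fits for a lambda grid search with k-fold CV,
    plus one final refit on all the data at the selected lambda."""
    return n_lambdas * n_folds + 1

print(cv_fit_count(n_lambdas=100, n_folds=5))  # 501, versus a single fit for an unpenalized GLM
```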

Appendix C: Miscellaneous

There are three parts to this appendix: rebasing models, near aliasing, and AIC. We have already covered the last two in earlier wiki articles, so we focus here on rebasing models.

According to Holmes and Casotto, it is still important to carefully choose the base level (category) for each rating variable to be the one with the highest level of exposures within the rating variable when using penalized regression or lasso credibility procedures.

After a model has been produced, it may be rebased to present the output in a more logical or appropriate format. For example, most cars now have airbags on all sides, so Surround Airbags may have the most exposures in a Comprehensive coverage model and receive a relativity of 1.000. Older vehicles which have Driver Side Only airbags likely have fewer exposures and may be modeled with, say, a relativity of 1.250. Rather than saying Driver Side Only airbags receive a 25% surcharge, it may be preferable to advertise a discount of 20% (1.000 / 1.250 = 0.800) for vehicles which have Surround Airbags. This rebasing has no impact on the model scoring, and the rate charged is unchanged as long as the base rate is also adjusted.
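The airbag example can be checked numerically. This is a minimal sketch: the `rebase` helper and the base rate of 400 are hypothetical, while the relativities are those in the example above.

```python
def rebase(relativities, new_base, base_rate):
    """Divide every relativity by the new base level's relativity and
    scale the base rate by the same factor so the rates charged do not change."""
    factor = relativities[new_base]
    rebased = {level: rel / factor for level, rel in relativities.items()}
    return rebased, base_rate * factor

original = {"Surround": 1.000, "Driver Side Only": 1.250}
base_rate = 400.0  # hypothetical base rate

rebased, new_base_rate = rebase(original, "Driver Side Only", base_rate)
print(rebased)        # {'Surround': 0.8, 'Driver Side Only': 1.0}
print(new_base_rate)  # 500.0
```

Surround Airbags vehicles pay 400 * 1.000 before and 500 * 0.800 after, so the rate charged is the same either way.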

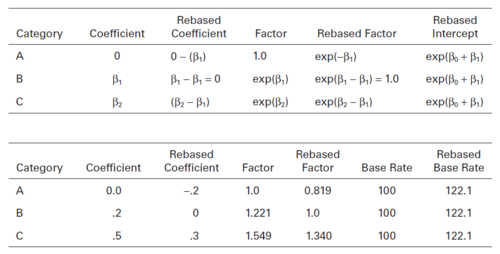

The chart shown here is taken from the source. We assume a log-link function and divide the model output for the rating variable by the relativity for Category B, [math]e^{\beta_1}[/math] (equivalently, multiply by [math]e^{-\beta_1}[/math]). Since the rate charged for Category B risks was the Original Base Rate * [math]e^{\beta_1}[/math], to avoid changing the rate charged we must multiply the Original Base Rate by [math]e^{\beta_1}[/math].
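As a check, write [math]B[/math] for the Original Base Rate and [math]\beta_k[/math] for the coefficient of any category [math]k[/math], with [math]\beta_k = 0[/math] for the original base level (this shorthand is ours, not the source's). After rebasing to Category B, the rate charged is

[math]\left(B \cdot e^{\beta_1}\right) \cdot e^{\beta_k - \beta_1} = B \cdot e^{\beta_k}[/math]

which matches the rate charged before rebasing for every category.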

Appendix D: Sparsity: A Convex Optimization Perspective

This appendix demonstrates that lasso regression achieves sparsity because it fits the signal only up to a threshold. It's a fairly dense mathematical proof which, in our opinion, is unlikely to be tested on the exam. We're omitting this material to help you focus on the core content; the source is fairly accessible and brief if you're interested in the technical details.
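For a flavor of the result, the textbook special case of squared-error loss with a single standardized predictor has a closed-form lasso solution known as soft-thresholding. This is a standard illustration under those simplifying assumptions and is not necessarily the derivation used in the source; `soft_threshold` is our name.

```python
def soft_threshold(z, lam):
    """Minimizer of 0.5 * (z - b)**2 + lam * abs(b) over b, for lam >= 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # signal no larger than the threshold: coefficient is exactly zero

for z in (0.3, -0.4, 1.2, -2.0):
    print(z, soft_threshold(z, lam=0.5))
```

Signals smaller in magnitude than `lam` are set exactly to zero, which is the source of sparsity; larger signals are kept but shrunk toward zero by `lam`.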
